Artificial intelligence isn’t an add-on anymore — it’s the engine behind smarter workflows. Claris FileMaker has quietly become a practical bridge between everyday business processes and large language models (LLMs), embeddings, and automation platforms. This article explains what FileMaker now offers for AI, how it fits into real automation architectures, practical use cases, and clear, research-backed rollout and governance guidance so teams can adopt AI safely and effectively.

What’s new — FileMaker as an AI platform

Recent FileMaker releases add first-class AI building blocks: native script steps to configure AI accounts, call models, create embeddings, and perform semantic (meaning-based) finds — plus admin features to run or manage AI services locally. In short: FileMaker is no longer just a low-code database; it’s a platform that can host, call, and orchestrate AI within operational apps.

The technical picture — how AI fits into a FileMaker workflow

1. Basic architecture

A typical AI workflow with FileMaker looks like this: the FileMaker app (FileMaker Pro, WebDirect, or FileMaker Go) collects user input → a script step calls a configured AI account (OpenAI, Anthropic, Cohere, or a local model) → the model returns text, embeddings, or structured data → FileMaker uses that output to update records, trigger automations, or surface results in dashboards. Claris provides native script steps (e.g., Configure AI Account, Generate Response from Model, Insert Embedding, Perform Semantic Find) so these calls do not require hand-built API integrations.
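As a rough illustration of that flow, here is a minimal Python sketch. It is not FileMaker script syntax: `Record`, `fake_model`, and `handle_request` are hypothetical names, and the model call is a stub standing in for whatever a script step such as Generate Response from Model would return.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Record:
    """Stand-in for a FileMaker record (field names are hypothetical)."""
    user_input: str
    ai_summary: str = ""
    status: str = "new"


def fake_model(prompt: str) -> str:
    """Placeholder for a real LLM call; it just echoes a truncated prompt."""
    return "SUMMARY: " + prompt[:40]


def handle_request(record: Record, model: Callable[[str], str]) -> Record:
    """Collect input, call the model, then write the output back to the record."""
    prompt = f"Summarize this request in one line: {record.user_input}"
    record.ai_summary = model(prompt)
    # Mark the record so a later automation or a reviewer can pick it up.
    record.status = "needs_review"
    return record


rec = handle_request(Record("Customer asks about a delayed invoice"), fake_model)
print(rec.status, "|", rec.ai_summary)
```

Passing the model in as a function keeps the workflow logic independent of the provider, which mirrors the idea of configuring the AI account separately from the scripts that use it.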

2. Two common deployment patterns

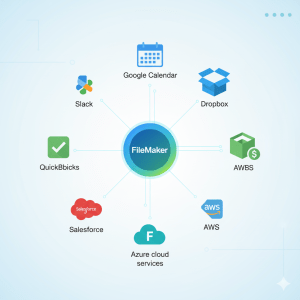

- Cloud LLM + FileMaker: FileMaker sends prompts/requests to a cloud LLM (OpenAI, Anthropic). Easy to start, pay-as-you-go, good for prototypes and non-sensitive data.

- On-prem / local model server + FileMaker: organizations can host models locally (on dedicated GPU servers) and connect them to FileMaker Server’s AI services for privacy, compliance, and cost control. FileMaker 2025 and related admin features explicitly support running model servers for this reason.

3. Embeddings + semantic search (RAG capability)

FileMaker can compute and store embeddings (vector representations of text or images) and perform semantic finds — letting users ask natural-language questions and retrieve contextually relevant records even when keyword matches fail. This is the backbone of Retrieval-Augmented Generation (RAG) inside FileMaker apps.
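To make the idea concrete, here is a small Python sketch of ranking records by vector similarity. It assumes cosine similarity as the scoring function, which is a common choice for embeddings; the article does not describe how Perform Semantic Find scores matches internally, and the record IDs and three-dimensional vectors are made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def semantic_find(query_vec, records, top_k=2):
    """Return the IDs of the top_k records whose vectors are closest to the query."""
    scored = [(cosine_similarity(query_vec, vec), rec_id) for rec_id, vec in records.items()]
    scored.sort(reverse=True)
    return [rec_id for _, rec_id in scored[:top_k]]


# Toy 3-dimensional vectors; real embeddings have hundreds or thousands of dimensions.
records = {
    "INV-001": [0.9, 0.1, 0.0],
    "INV-002": [0.0, 1.0, 0.0],
    "INV-003": [0.7, 0.3, 0.1],
}
query = [1.0, 0.0, 0.0]
print(semantic_find(query, records))  # ['INV-001', 'INV-003']
```

In a RAG setup, the text of the top-ranked records would then be placed into the prompt so the model answers from your own data.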

High-impact use cases (real, deployable today)

- Natural language search across messy records — replace clumsy keyword searches with “find the invoice about the blue Honda delivered in March” and return the right record via semantic search.

- Automated report and email drafting — generate first-draft client communications, follow-ups, or weekly reports from structured data, then let humans review. (FileMaker scripts can call generative endpoints and fill template fields.)

- Data cleaning & normalization — use AI to standardize free-text entries (addresses, product names) and flag ambiguous rows for human review.

- Document ingestion (OCR → classify → extract) — combine OCR pipelines with embeddings to index contracts, invoices, and support tickets for immediate retrieval. Claris supports image embeddings and semantic search on images.

- Smart automation triggers — use AI to classify incoming requests (sales, support, urgent) and automatically route or escalate them through Claris Connect or internal scripts.

- Agent/assistant workflows for internal knowledge — build chat assistants that answer from your company data (RAG) inside FileMaker apps, reducing time to information for frontline staff.
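The smart-trigger use case above can be sketched in a few lines of Python. Everything here is an assumption for illustration: the queue names, the 0.75 confidence threshold, and `keyword_classifier`, which is a stub standing in for a real model call that returns a label and a confidence score.

```python
from typing import Callable, Tuple

# Hypothetical mapping from a classifier label to a destination queue.
ROUTES = {"sales": "sales_queue", "support": "support_queue", "urgent": "on_call"}
CONFIDENCE_THRESHOLD = 0.75  # assumed cut-off; tune it during the pilot


def keyword_classifier(text: str) -> Tuple[str, float]:
    """Stand-in for a model call: returns (label, confidence)."""
    lowered = text.lower()
    if "outage" in lowered or "down" in lowered:
        return "urgent", 0.9
    if "quote" in lowered or "pricing" in lowered:
        return "sales", 0.8
    return "support", 0.5


def route(text: str, classify: Callable[[str], Tuple[str, float]] = keyword_classifier) -> str:
    """Route automatically only when the label is known and the confidence is high enough."""
    label, confidence = classify(text)
    if label not in ROUTES or confidence < CONFIDENCE_THRESHOLD:
        return "human_review"
    return ROUTES[label]


print(route("Our server is down"))
print(route("Please send a quote"))
print(route("hello"))
```

Sending low-confidence or unknown labels to a human queue keeps the automation useful without letting a misclassification escalate or bury a request.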

Practical implementation: step-by-step (how to get started)

Step A — Identify the first, high-value micro-workflow

Pick a single pain point (e.g., searching invoices, drafting quotes). Keep the scope small and define measurable KPIs (time saved, errors reduced).

Step B — Prototype inside FileMaker

Use Configure AI Account to connect a test API key (OpenAI or another provider), then try a Generate Response from Model or Perform Semantic Find script. Prototype quickly, then test with 5–10 real users.

Step C — Add embeddings for smarter recall

If searching or RAG is needed, create embeddings from relevant fields (descriptions, notes), store them in a dedicated embedding table, and use Perform Semantic Find against those vectors. Best practice: keep embeddings in a separate related table, which keeps the primary table lean and makes it easier to version or regenerate vectors when the model changes.
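One way to picture the separate embedding table is the sketch below. It uses Python's built-in sqlite3 module purely as a stand-in for FileMaker tables; the table names, column names, and the model name `example-embed-v1` are hypothetical, and FileMaker has its own field types for storing vectors.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE invoice (
    id INTEGER PRIMARY KEY,
    notes TEXT NOT NULL
);
CREATE TABLE invoice_embedding (
    id INTEGER PRIMARY KEY,
    invoice_id INTEGER NOT NULL REFERENCES invoice(id),
    model TEXT NOT NULL,   -- which embedding model produced the vector
    vector TEXT NOT NULL,  -- JSON-encoded list of floats
    created_at TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
);
""")

conn.execute("INSERT INTO invoice (id, notes) VALUES (1, 'Blue Honda delivered in March')")
conn.execute(
    "INSERT INTO invoice_embedding (invoice_id, model, vector) VALUES (?, ?, ?)",
    (1, "example-embed-v1", json.dumps([0.12, 0.88, 0.05])),
)


def stale_invoice_ids(conn, current_model):
    """Return IDs of invoices that have no embedding from the current model."""
    rows = conn.execute(
        """
        SELECT i.id FROM invoice AS i
        WHERE NOT EXISTS (
            SELECT 1 FROM invoice_embedding AS e
            WHERE e.invoice_id = i.id AND e.model = ?
        )
        ORDER BY i.id
        """,
        (current_model,),
    ).fetchall()
    return [row[0] for row in rows]


print(stale_invoice_ids(conn, "example-embed-v1"))  # []
print(stale_invoice_ids(conn, "example-embed-v2"))  # [1]
```

Because each vector row names the model that produced it, switching models becomes a query for records that still need re-embedding rather than a change to the primary table.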

Step D — Move to hardening & governance

Define the data types that must never leave your infrastructure (PII, trade secrets). If necessary, switch to an on-prem AI server or a private-cloud model host to keep sensitive context in-house. FileMaker 2025 supports local AI servers to enable this.
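A simple way to enforce such a rule is to decide the destination per request, based on which fields the request contains. The Python sketch below illustrates the idea only: the restricted field names and both URLs are placeholders, not real FileMaker settings or endpoints.

```python
from dataclasses import dataclass

# Field names that must stay in-house (hypothetical; define your own list).
RESTRICTED_FIELDS = {"ssn", "salary", "medical_notes"}


@dataclass(frozen=True)
class Endpoint:
    name: str
    url: str


CLOUD = Endpoint("cloud", "https://api.example.com/v1")       # placeholder URL
LOCAL = Endpoint("local", "http://ai.internal.example:8080")  # placeholder URL


def choose_endpoint(payload: dict) -> Endpoint:
    """Use the local server whenever any restricted field carries a value."""
    has_restricted = any(payload.get(name) for name in RESTRICTED_FIELDS)
    return LOCAL if has_restricted else CLOUD


print(choose_endpoint({"notes": "Delivery delayed"}).name)     # cloud
print(choose_endpoint({"notes": "Raise", "salary": 5}).name)   # local
```

Keeping the restricted list in one place means the policy can be reviewed and audited without reading every script that calls a model.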

Step E — Cost, throttling, and UX

Introduce caching for repeated prompts, rate limits for expensive calls (every LLM call consumes billable tokens), and graceful fallbacks (local rules if the API is unavailable). Design UX that shows “AI suggested” content and requires human confirmation for consequential actions.
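The three safeguards can be combined in one small wrapper. The Python sketch below is one possible shape, not a FileMaker feature: `GuardedModel`, `fake_model`, and `rule_based` are hypothetical names, the cache is an in-memory dictionary, and the rate limit is a simple sliding window.

```python
import time


class GuardedModel:
    """Wraps a model call with a prompt cache, a sliding-window rate limit, and a fallback."""

    def __init__(self, call_model, fallback, max_calls, per_seconds, clock=time.monotonic):
        self.call_model = call_model
        self.fallback = fallback
        self.max_calls = max_calls
        self.per_seconds = per_seconds
        self.clock = clock
        self.cache = {}
        self.call_times = []

    def _allowed(self):
        # Keep only the calls that are still inside the time window.
        now = self.clock()
        self.call_times = [t for t in self.call_times if now - t < self.per_seconds]
        return len(self.call_times) < self.max_calls

    def ask(self, prompt):
        if prompt in self.cache:       # repeated prompt: no new model call
            return self.cache[prompt]
        if not self._allowed():        # over the limit: use local rules instead
            return self.fallback(prompt)
        self.call_times.append(self.clock())
        try:
            answer = self.call_model(prompt)
        except Exception:              # API unavailable or failed: degrade gracefully
            return self.fallback(prompt)
        self.cache[prompt] = answer
        return answer


calls = []


def fake_model(prompt):
    calls.append(prompt)
    return f"AI suggested: {prompt.upper()}"


def rule_based(prompt):
    return "AI unavailable, please fill in manually"


guarded = GuardedModel(fake_model, rule_based, max_calls=1, per_seconds=60)
first = guarded.ask("draft follow-up")
second = guarded.ask("draft follow-up")  # served from the cache
third = guarded.ask("draft quote")       # rate-limited, so the fallback answers
print(first, "|", third, "|", len(calls))
```

Prefixing model output with a visible label such as "AI suggested" also supports the UX point above: users can tell at a glance which text still needs their confirmation.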

Best practices & governance (research-based)

- Prompt templates & versioning: use reusable prompt templates (FileMaker can store and reference prompt templates) to keep behavior consistent and auditable.

- Store model & embedding metadata: record which model and embedding version produced each vector so you can re-run or audit results later. Vectors produced by different embedding models are not directly comparable, so this metadata also tells you which records to re-embed after a model change.

- Human-in-the-loop (HITL): for customer-facing content or decisions with regulatory impact, require human review of AI outputs before publishing.

- Privacy first: if you handle sensitive data, prefer local models or vetted enterprise AI providers; encrypt embeddings and audit access logs. FileMaker’s newer admin features explicitly help keep sensitive data inside your infrastructure.

- Cost monitoring: LLM costs can be unpredictable; instrument token usage, set budgets, and prefer embeddings plus semantic search over repeated generation calls.
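The prompt-template practice from the list above can be illustrated with a short Python sketch. It shows the general idea of versioned templates plus an audit trail and is not FileMaker's own template mechanism; the template names, wording, and the `audit_log` structure are all hypothetical.

```python
from string import Template

# Templates are keyed by (name, version) so older versions stay available for audits.
PROMPT_TEMPLATES = {
    ("follow_up_email", 1): Template("Write a short follow-up email to $client about $topic."),
    ("follow_up_email", 2): Template(
        "Write a polite follow-up email to $client about $topic. Keep it under 120 words."
    ),
}

audit_log = []


def render_prompt(name, version, **fields):
    """Fill a specific template version and record which one was used."""
    template = PROMPT_TEMPLATES[(name, version)]
    prompt = template.substitute(**fields)  # raises KeyError if a placeholder is missing
    audit_log.append({"template": name, "version": version, "fields": sorted(fields)})
    return prompt


prompt = render_prompt("follow_up_email", 2, client="Acme", topic="invoice 42")
print(prompt)
print(audit_log[-1])
```

Logging the field names rather than their values keeps the audit trail useful without copying customer data into a second place.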

Common pitfalls (and how to avoid them)

- Blind trust in generated outputs: AI hallucination remains a risk. Always design verification steps for any output used in decisions.

- Embedding sprawl without governance: storing embeddings inline with primary data makes migrations and model changes painful. Use separate embedding tables and include model/version fields.

- Leaking sensitive context to public APIs: sanitize or truncate inputs before passing them to cloud LLMs; consider on-prem models where compliance requires it.

- Over-automation early: automate low-risk, high-frequency tasks first (drafts, triage); save mission-critical automations for later after proving accuracy.
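For the leakage pitfall above, a sanitizing step before any cloud call might look like the Python sketch below. The regular expressions and the 500-character limit are assumptions chosen for illustration; they are deliberately simple, will both over-match and under-match real data, and are no substitute for a proper data-classification policy.

```python
import re

# Deliberately simple patterns for illustration; real PII detection needs more care.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")
MAX_CHARS = 500  # assumed limit on how much context is sent to a cloud model


def sanitize(text, max_chars=MAX_CHARS):
    """Mask email addresses and phone-like numbers, then truncate the text."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text[:max_chars]


print(sanitize("Contact jane.doe@example.com or +1 (555) 123-4567 today"))
# Contact [EMAIL] or [PHONE] today
```

Running the sanitizer in one shared function, rather than inside each script, makes it easier to tighten the rules later without hunting for every call site.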

Real-world signals & community evidence

Claris documentation and the FileMaker community both show these features in active use. Claris’ AI pages and help center document the native AI script steps and semantic search. Community posts and third-party consultancies reported successful use cases and emerging on-premise AI server patterns in 2024–2025, and marketplace and third-party blogs publish how-tos for integrating OpenAI/ChatGPT with FileMaker. Together, these indicate that the approach is in practical use, not just theory.

Example: a small rollout blueprint (2–4 weeks)

Week 0 (planning): pick workflow, KPIs, stakeholders, compliance constraints.

Week 1 (prototype): wire up AI account, create 1–2 scripts (Generate Response + simple UI). Test with sample data.

Week 2 (embeddings & RAG): add embedding generation for the dataset, implement Perform Semantic Find, build a review interface.

Week 3 (pilot): run live pilot with 5–10 users, collect error cases and edge prompts.

Week 4 (harden & launch): add logging, access controls, and rate limits, then roll out to the target team.

Final takeaways — why FileMaker matters for AI workflows

- Practicality: FileMaker converts AI experiments into operational workflows because it combines data, UI, and scripting in one platform.

- Choice & control: you can start on public LLMs and migrate to private/on-prem models as governance needs rise — Claris supports both approaches.

- Speed to value: native script steps and embeddings cut integration overhead so teams see outcomes faster than building custom stacks from scratch.