Across its 2024 and 2025 releases, Claris has embedded first-class AI building blocks directly into the FileMaker platform: script steps and functions to call models, semantic search, private knowledge bases, and support for multiple model providers. That means you can now add LLM-powered summaries, natural-language finds, smart document parsing, chatbots, and on-the-fly content generation inside your FileMaker apps, with a developer-friendly workflow and attention to data privacy.

1) What’s actually new (quick, factual snapshot)

- Native AI script steps & functions — FileMaker has built-in script steps that let you send prompts to models and get responses inside scripts (e.g., Generate Response from Model). This brings generative AI directly into app workflows.

- Semantic search / natural language finds — you can perform “finds” using plain-language queries or semantic embeddings to surface relevant records (including images and documents).

- Private knowledge bases & model provider flexibility — FileMaker supports private knowledge-bases for sensitive data and allows multiple model providers (hosted vendors or self-hosted models) so teams can choose cost/privacy tradeoffs.

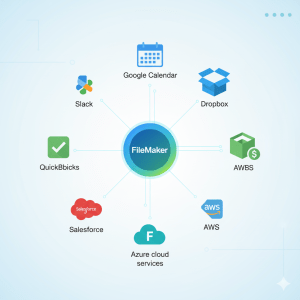

- Tighter Claris ecosystem integration — these AI features are part of the wider Claris platform (FileMaker + Claris Connect + Claris Studio), making it easier to expose AI-enhanced data to web views and connected apps.

2) How FileMaker’s AI fits into app architecture (plain language)

Think of FileMaker as the place where your data, UI, and business logic live. The 2024–25 AI features add a new layer: AI as a scriptable microservice. From a developer POV you have three building blocks:

- Prompting step(s) — send prompts and receive text or structured responses inside scripts.

- Embeddings/semantic index — index documents/images/records so the system can answer “which records are most like this question?”

- Private model / provider connector — choose whether to call a commercial API or a private LLM (self-hosted) for higher privacy/control.

This means your workflows (approvals, ticket triage, reporting) can call AI at decision points — e.g., auto-summarize an invoice, generate a customer reply draft, or find related contracts — and return results directly into FileMaker fields/UI.
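
To make the embeddings/semantic index idea concrete, here is a minimal Python sketch of what "which records are most like this question?" means under the hood: each record and the query are vectors, and records are ranked by cosine similarity. This is an illustration outside FileMaker, not FileMaker's implementation; the record IDs, the toy 3-dimensional vectors, and the function names are all made up for the example.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat an all-zero vector as "not similar to anything"
    return dot / (norm_a * norm_b)

def rank_records(query_vec, records, top_k=3):
    """Return (record_id, score) pairs sorted by similarity, best first."""
    scored = [(rid, cosine_similarity(query_vec, vec)) for rid, vec in records.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Hypothetical toy vectors; real embeddings come from a model and have
# hundreds or thousands of dimensions.
records = {
    "contract-001": [0.9, 0.1, 0.0],
    "manual-017": [0.0, 0.8, 0.6],
    "invoice-230": [0.7, 0.3, 0.1],
}
query = [1.0, 0.2, 0.0]
top = rank_records(query, records, top_k=2)
```

With these toy vectors the contract ranks first and the invoice second, because both point in roughly the same direction as the query while the manual does not.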

3) Real, high-impact use cases (with ROI potential)

- Automated customer response drafts — generate a first draft for support tickets; human reviews before send. Saves time and speeds SLAs.

- Semantic document search — find the right contracts, manuals, or images using meaning instead of exact keywords — huge time savings for knowledge workers.

- Intelligent data entry & classification — auto-extract entities (names, dates, amounts) from uploaded PDFs or emails and populate records. Reduces manual errors.

- Private knowledge-base chatbots: a secure Q&A assistant grounded in company docs (internal manuals, policies) for onboarding and support, which can take routine questions off your subject-matter experts.

- Smart reporting & summarization — auto-summarize long reports or meeting notes for managers; generate executive summaries.

4) Implementation playbook — step-by-step (practical)

Below is a developer/admin playbook you can follow to add AI to a FileMaker solution.

Step 0 — goals & governance

- Define measurable goals (e.g., reduce ticket triage time by 40%).

- Identify the privacy level of the data (sensitive PII? customer secrets?) and choose private or self-hosted LLMs where the data requires it.

Step 1 — prototype on a narrow use case

- Pick one workflow (e.g., summarize inbound emails). Build a small prototype of the flow in a sandbox, using canned data.

Step 2 — choose a model provider

- Options: commercial cloud LLMs (fast to start) or self-hosted/local models (better privacy/cost control). FileMaker supports multiple providers and can call them via the new script steps or through Claris Connect.

Step 3 — data prep & private knowledge base

- Create a secure knowledge base: sanitize PII, create chunked documents for embedding, and apply access controls. FileMaker 2025 supports private KBs to limit data leakage.
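
As a rough illustration of the "chunked documents" part of this step, the Python sketch below splits text into overlapping word-based chunks before embedding. The chunk size, the overlap, and the function name are assumptions for the example; they are not FileMaker settings, and the right values depend on your documents and model.

```python
def chunk_text(text, max_words=200, overlap=40):
    """Split text into word-based chunks that overlap with their neighbor."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    chunks = []
    step = max_words - overlap  # how far the window advances each time
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks

# Tiny example so the overlap is easy to see.
sample = " ".join(f"w{i}" for i in range(10))
chunks = chunk_text(sample, max_words=4, overlap=1)
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, at the cost of storing some text twice.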

Step 4 — write FileMaker scripts

- Use the Generate Response from Model script step to send prompts and capture outputs. Combine this with semantic-find script steps for retrieval-augmented generation (RAG).
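
The RAG pattern itself is simple: retrieve the most relevant chunks, then put them in the prompt ahead of the question. The Python sketch below shows only the prompt-assembly half, under the assumption that retrieval has already returned a list of text chunks. The function name, the instruction wording, and the character budget are illustrative choices, not anything FileMaker prescribes.

```python
def build_rag_prompt(question, retrieved_chunks, max_chars=4000):
    """Assemble a grounded prompt from a question and retrieved text chunks."""
    context_parts = []
    used = 0
    for i, chunk in enumerate(retrieved_chunks, start=1):
        entry = f"[{i}] {chunk.strip()}"
        if used + len(entry) > max_chars:
            break  # stay within a rough context budget
        context_parts.append(entry)
        used += len(entry)
    context = "\n".join(context_parts)
    return (
        "Answer using only the numbered context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )

prompt = build_rag_prompt(
    "When does the lease end?",
    ["Lease ends 2026-03-31.", "Rent is due monthly."],
)
```

Numbering the chunks makes it easier to ask the model to cite which passage it relied on, which in turn helps reviewers spot unsupported answers.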

Step 5 — human-in-the-loop & QA

- Always surface AI outputs for review in high-risk flows (customer messages, legal summaries). Track corrections to improve prompts and functions.

Step 6 — monitor, measure, iterate

- Log API usage, latency, costs, and accuracy. Use a small dataset of golden examples to measure drift and model performance.
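
A golden-example check can be very small. The Python sketch below scores any prediction function against a fixed list of input/expected pairs and returns the failures for inspection. The keyword classifier is a stand-in for a real model call, and the example tickets and labels are invented for illustration.

```python
def evaluate_golden(predict, golden_examples):
    """Return accuracy and the list of failing examples for a golden set."""
    failures = []
    for example in golden_examples:
        predicted = predict(example["input"])
        if predicted != example["expected"]:
            failures.append({**example, "predicted": predicted})
    total = len(golden_examples)
    accuracy = (total - len(failures)) / total if total else 0.0
    return accuracy, failures

def keyword_classifier(text):
    """Stand-in for a model call: routes tickets by simple keywords."""
    lowered = text.lower()
    if "invoice" in lowered:
        return "billing"
    if "password" in lowered:
        return "account"
    return "general"

golden = [
    {"input": "Where is my invoice?", "expected": "billing"},
    {"input": "I forgot my password", "expected": "account"},
    {"input": "Do you ship abroad?", "expected": "general"},
    {"input": "I was charged twice", "expected": "billing"},
]
accuracy, failures = evaluate_golden(keyword_classifier, golden)
```

Re-running the same golden set after every prompt or model change gives you a simple drift signal: if accuracy drops, the failures list shows exactly which examples regressed.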

5) Example: pseudocode / script logic (conceptual)

This is intentionally conceptual — exact script names and parameters are available in FileMaker’s docs and script workspace.

- User triggers “Summarize Document” on a record.

- Script step: ExtractTextFromContainer → store as $docText.

- Script step: CreateEmbedding($docText) → store vector in local index.

- Script step: Generate Response from Model(prompt: "Summarize this for a manager: " & $docText, model: 'chosen-provider') → store in SummaryField.

- UI: show generated summary, with Approve / Edit / Reject buttons. On Approve, push to external system via Claris Connect.
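
The Approve / Edit / Reject part of this flow is ordinary state tracking, and it is worth getting right because it is your audit trail. The Python sketch below models it outside FileMaker; the class, field, and reviewer names are hypothetical, and in a real solution the same state would live in FileMaker fields and scripts.

```python
from dataclasses import dataclass, field

@dataclass
class SummaryReview:
    """Tracks one AI-generated summary through human review."""
    draft: str
    status: str = "pending"  # pending -> approved | rejected
    final_text: str = ""
    history: list = field(default_factory=list)

    def approve(self, reviewer):
        self._close("approved", self.draft, reviewer)

    def edit_and_approve(self, reviewer, new_text):
        self._close("approved", new_text, reviewer, edited=True)

    def reject(self, reviewer):
        self._close("rejected", "", reviewer)

    def _close(self, status, text, reviewer, edited=False):
        # A review can only be decided once.
        if self.status != "pending":
            raise ValueError(f"review already {self.status}")
        self.status = status
        self.final_text = text
        self.history.append({"reviewer": reviewer, "action": status, "edited": edited})

review = SummaryReview(draft="Q3 revenue rose 4%.")
review.edit_and_approve("dana", "Q3 revenue rose 4.2%.")
```

Recording whether the reviewer edited the draft gives you a cheap quality metric: a falling edit rate suggests the prompt is improving.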

6) Best practices & hard lessons from early adopters

- Prompt engineering matters. The same prompt can produce wildly different results; store and version prompts.

- Human review is essential at first. Automate low-risk tasks first; require human sign-off for anything customer-facing.

- Limit exposure of sensitive data. Don’t send raw PII to 3rd-party models unless contract & controls exist — consider on-prem/self-hosted LLMs.

- Cache & rate-limit. Cache repeated queries and batch operations to reduce costs and latency.

- Version your knowledge base and embeddings. Keep track of which snapshot of documents the model can access.
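
Caching and prompt versioning work well together: if the cache key includes the model and the prompt version, changing either one naturally invalidates old answers. The Python sketch below is a minimal in-memory version of that idea. The class name, the fake model function, and the version labels are assumptions for illustration; a real solution would persist the cache, for example in a FileMaker table.

```python
import hashlib

class ResponseCache:
    """In-memory cache keyed by model, prompt version, and prompt text."""

    def __init__(self):
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model, prompt_version, prompt):
        # The unit separator keeps the three parts from running together.
        raw = f"{model}\x1f{prompt_version}\x1f{prompt}".encode("utf-8")
        return hashlib.sha256(raw).hexdigest()

    def get_or_call(self, model, prompt_version, prompt, call_model):
        key = self._key(model, prompt_version, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = call_model(prompt)  # only reached on a cache miss
        self._store[key] = response
        return response

calls = []

def fake_model(prompt):
    """Stand-in for a paid model call; records each invocation."""
    calls.append(prompt)
    return f"summary of: {prompt}"

cache = ResponseCache()
first = cache.get_or_call("model-a", "v1", "Summarize ticket 42", fake_model)
second = cache.get_or_call("model-a", "v1", "Summarize ticket 42", fake_model)
third = cache.get_or_call("model-a", "v2", "Summarize ticket 42", fake_model)
```

Here the second request is served from the cache, while the third triggers a fresh call because the prompt version changed.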

7) Security, privacy & compliance — what to watch

- Data residency & contracts: commercial model providers may process data in unpredictable regions — check vendor agreements. If that’s a problem, prefer self-hosted models or the private knowledge-base options in FileMaker.

- Access controls: protect who can trigger AI steps or view generated outputs. Use FileMaker’s privilege sets and Claris platform access controls.

- Audit logging: keep logs of prompts/responses (redact PII). Log who approved or published AI outputs.
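
For audit logging with redaction, a sketch like the following Python shows the shape of the idea: scrub obvious identifiers from the prompt and response, then write a structured entry that records who did what. The regular expressions are deliberately crude assumptions that will miss some PII and over-match some numeric strings such as dates, so treat them as a starting point rather than a compliance control. The field names are hypothetical.

```python
import json
import re

# Crude illustrative patterns; real PII detection needs more than regexes.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)

def audit_entry(user, action, prompt, response, timestamp):
    """Build one JSON log line with redacted prompt and response text."""
    return json.dumps(
        {
            "timestamp": timestamp,
            "user": user,
            "action": action,
            "prompt": redact(prompt),
            "response": redact(response),
        },
        sort_keys=True,
    )

line = audit_entry(
    user="dana",
    action="approved",
    prompt="Draft a reply to jane.doe@example.com",
    response="Call us at +1 (555) 010-2345.",
    timestamp="2025-01-01T00:00:00Z",
)
```

Redacting before the entry is written, rather than when it is read, means the log itself never holds the raw identifiers.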

8) Limitations & realistic expectations

- Hallucinations still happen. LLMs can invent facts; verification is required.

- Cost & latency tradeoffs. Generative steps have variable cost and response times; architect for async/batch where possible.

- Not a silver bullet. AI augments workflows but doesn’t replace domain expertise.

9) Measuring success (KPIs you can use)

- Time saved per task (manual → AI-assisted).

- Reduction in turnaround time (e.g., ticket reply SLA).

- Accuracy (%) of auto-classification vs. human label.

- Cost per successful automation (API cost divided by successful runs), tracked alongside net savings (labor savings minus API cost).

- User satisfaction (surveys after AI-assisted actions).
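
Most of these KPIs are simple arithmetic once you log the inputs. The Python sketch below works one example through, assuming cost per successful automation means API spend divided by successful runs and that net savings is reported separately as labor savings minus API spend. All of the figures are invented for illustration.

```python
def automation_kpis(tasks, manual_minutes, assisted_minutes,
                    hourly_rate, api_cost, successes):
    """Compute time and cost KPIs for one AI-assisted workflow."""
    minutes_saved = tasks * (manual_minutes - assisted_minutes)
    hours_saved = minutes_saved / 60
    labor_savings = hours_saved * hourly_rate
    return {
        "hours_saved": hours_saved,
        "labor_savings": labor_savings,
        "net_savings": labor_savings - api_cost,
        # None when nothing succeeded, to avoid dividing by zero.
        "cost_per_success": api_cost / successes if successes else None,
    }

# Hypothetical month: 600 tickets, 6 minutes manual vs 2 minutes assisted,
# a 30/hour labor rate, 50 in API spend, and 500 drafts accepted.
kpis = automation_kpis(
    tasks=600, manual_minutes=6, assisted_minutes=2,
    hourly_rate=30, api_cost=50, successes=500,
)
```

In this made-up month the workflow saves 40 hours, worth 1,200 in labor, for a net saving of 1,150 at an API cost of 0.10 per accepted draft.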

10) Future outlook (what to expect from Claris & FileMaker)

Claris is positioning FileMaker as a low-code platform that embeds AI primitives so everyday custom apps can become “intelligent” on demand. Expect deeper RAG patterns, more connectors to model providers, and tighter cross-product capabilities (Studio + Connect + FileMaker) — making it easier to expose AI-enhanced FileMaker data on the web and in automations. For sensitive use cases, look for growing guidance and tooling around private model hosting and compliance.

Conclusion — Should you add AI to your FileMaker apps now?

Yes, but start small and deliberately. Keep humans in the loop and prioritize high-value, low-risk workflows (triage, semantic search, and summaries). For quick prototyping, use FileMaker’s built-in AI script steps. If cost or privacy becomes an issue, consider self-hosted model options. With careful design and monitoring, AI can turn repetitive work into measurable productivity gains and deliver on the “smarter workflows, faster results” promise.