This guide covers what slows down FileMaker solutions, which design mistakes to avoid, and the best practices that fix or prevent them. It is useful if you’re designing, optimizing, or maintaining a FileMaker database.

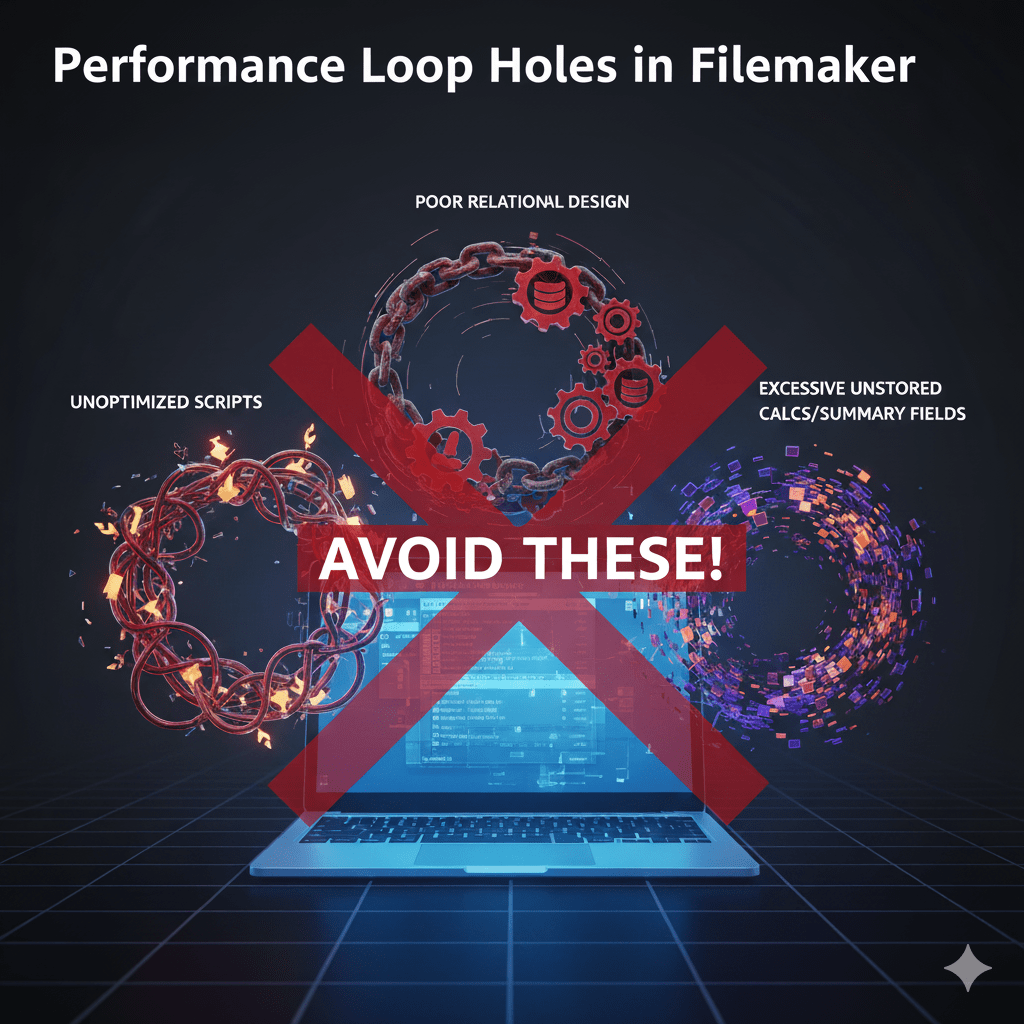

What Causes FileMaker to Be Slow — Common Performance Bottlenecks

These are the typical weak spots where performance degrades in FileMaker systems, especially as they scale or are used over networks:

- Too Many Fields / Wide Tables

- When a table has many fields, especially unstored calculations, summary fields, or container fields, every record load or layout render has more data to fetch and process.

- Even if a layout shows just one or two fields, FileMaker may still fetch and process data tied to every field in the table.
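Here is a back-of-envelope Python estimate of why table width matters even when a layout shows only a few fields. For illustration, it assumes the client fetches every stored field of each record it displays. The function name, field counts, and field sizes are hypothetical example values, not measurements.

```python
# Back-of-envelope estimate of data fetched for a list view, ASSUMING the
# client pulls every stored field of each displayed record.
# All field counts and sizes are hypothetical example values.


def bytes_fetched(records_shown: int, field_sizes: list[int]) -> int:
    """Bytes transferred if each displayed record is fetched in full."""
    return records_shown * sum(field_sizes)


# A "wide" table: 200 fields averaging 50 bytes each.
wide_table = [50] * 200
# A "narrow" table holding only the 10 fields the layout actually shows.
narrow_table = [50] * 10

print(bytes_fetched(40, wide_table))    # 400000
print(bytes_fetched(40, narrow_table))  # 20000
```

Under these assumptions, moving rarely used fields out of the main table cuts the data fetched for the same 40-row list view by a factor of 20.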

- Complex Layouts with Many Objects

- Layouts with many portals, objects, graphics, conditional formatting, etc., take longer to render. The more objects there are to draw, and the more visibility or formatting conditions to evaluate, the more processing time is needed.

- Layouts that display summary fields from related tables, or many calculations, can also cause delays.

- Unstored Calculations & Heavy Use of Summary/Calculation Fields

- Unstored calculations are recalculated every time they are referenced. If they’re used in many places (layouts, scripts, relationships), this adds up.

- Summary fields over related tables or “deep” relationship graphs can be expensive to compute.

- Over-complicated Relationships / Too Many Table Occurrences

- If your relationship graph is messy (many joins, complex match criteria, or many table occurrences (TOs)), FileMaker spends more time resolving relationships.

- Portal filtering combined with complex joins can be slow.

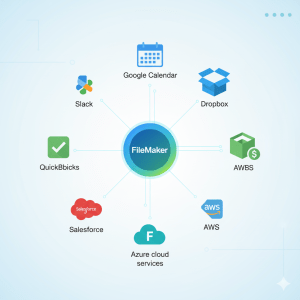

- Frequent Remote Calls, WAN Latency

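- When clients (including WebDirect and Data API clients) make many small requests back and forth to the server, especially across slow networks, performance suffers dramatically. Each remote call over a WAN pays the full network round-trip time, so the cost grows with the number of calls, not just the amount of data.

- Scripts that fetch or modify records one at a time in a loop, or that issue many Commit Records/Requests steps, make this worse.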
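The round-trip arithmetic behind this fits in a few lines of Python. The model is deliberately simple: it assumes each remote call waits one full round trip, and it ignores server processing time and payload size. The 50 ms round-trip time and the record counts are hypothetical.

```python
# Simplified model: every remote call waits one full network round trip.
# Server work and payload size are ignored; all numbers are hypothetical.


def network_wait_ms(calls: int, round_trip_ms: int) -> int:
    """Total time spent waiting on round trips, in milliseconds."""
    return calls * round_trip_ms


RTT_MS = 50  # assumed WAN round-trip time

# One call per record for 1,000 records...
per_record = network_wait_ms(calls=1_000, round_trip_ms=RTT_MS)
# ...versus fetching the same records in 10 batches of 100.
batched = network_wait_ms(calls=10, round_trip_ms=RTT_MS)

print(per_record)  # 50000 (50 seconds)
print(batched)     # 500 (half a second)
```

Even in this simplified model, replacing 1,000 single-record calls with 10 calls of 100 records removes about 49.5 seconds of pure network waiting.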

- Inefficient Scripts & Loops

- Scripts that contain nested loops, or loops over large found sets with many field operations, can be very slow, especially if each iteration causes a screen redraw or a record commit.

- Also, triggers (OnRecordLoad, OnObjectModify, etc.) that fire too often and do heavy work are a common problem.

- Poor Server Hardware / Mis-configured Server Environment

- If the FileMaker Server shares hardware with other server tasks (file server, print server, email, etc.), performance suffers.

- Slow spinning disks instead of SSDs, high-latency network storage, inadequate RAM, and disk I/O bottlenecks all limit performance.

- Excessive FileMaker Server Tasks During Peak Usage

- Running backups, scheduled scripts, or imports/exports during times of heavy use can cause contention for server resources.

- Large temp files or misuse of temporary folders can slow things down.

- Too Many Indexes or Wrong Indexing

- Fields that aren’t indexed but are frequently used for finds or sorts slow down searches. However, indexing too many fields, or fields that are seldom searched, bloats the file and adds index-maintenance work on every edit.

- Excessive Use of Script Triggers and Client-side Processing

- Triggers that run on layout load, object modify, or record load can be helpful, but heavy processing inside them degrades the user experience.

- Running scripts on the client when they would be better on the server (especially for batch or heavy operations) leads to unnecessary latency.

What to Avoid / Design Mistakes

To prevent the problems above, avoid the following when designing or extending FileMaker solutions:

- Designing tables with too many fields, especially calculation or summary fields that aren’t needed all the time.

- Putting large, complex unstored calculations on layouts or in relationships.

- Building layouts with many graphical or decorative elements, many portals, or overly complex conditional formatting.

- Heavy use of script triggers without controlling when they fire or what they do.

- Running scripts on the client that loop through thousands of records or make many remote calls, instead of offloading that work to the server.

- Using slow or unstable network connections for server-client communication without batching or caching.

- Scheduling backups, indexing, and heavy maintenance tasks during peak usage.

- Using generic “catch-all” value lists or global relationships that include many records or tables unnecessarily.

- Ignoring server hardware limits, or assuming that more users and more data can be handled without scaling up server resources or optimizing the design.

- Not monitoring logs, usage stats, or remote call logs; blindly adding features without testing performance.

Best Practices / How to Avoid the Pitfalls

Here are actionable tips and strategies to optimize FileMaker solutions:

- Use Perform Script On Server (PSOS) for Heavy Processes

- Offload data-intensive operations (loops, batch updates, imports/exports) to the server.

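- Make sure server-side scripts set their own context (layout, found set, etc.). A script run with PSOS starts in a separate server session, so it does not inherit the client’s current layout or found set.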

- Minimize Unstored Calculations; Use Stored or Auto-enter Fields

- Where possible, precompute values instead of calculating on the fly.

- Use auto-enter calculations or scripts (for example, run by a script trigger) to store values, so they are recalculated only when needed.
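This small Python analogy contrasts two approaches: recalculating a value on every reference, and storing it so it refreshes only when its inputs change. It is not how FileMaker evaluates fields internally. The `Invoice` class, its fields, and the evaluation counter are hypothetical.

```python
# Analogy only: an "unstored" value is recomputed on every read, while a
# "stored" value is recomputed only when one of its inputs changes.
# The Invoice class and its fields are hypothetical.


class Invoice:
    def __init__(self, qty: int, price: int) -> None:
        self.evaluations = 0  # how many times the calculation actually ran
        self._qty = qty
        self._price = price
        self._stored_total = self._compute()  # computed once when created

    def _compute(self) -> int:
        self.evaluations += 1
        return self._qty * self._price

    @property
    def unstored_total(self) -> int:
        # Recalculated every time it is referenced.
        return self._compute()

    @property
    def stored_total(self) -> int:
        # Reads the saved value; no recalculation.
        return self._stored_total

    def set_qty(self, qty: int) -> None:
        # An input changed, so refresh the stored value once, similar in
        # spirit to an auto-enter calculation updating when its source changes.
        self._qty = qty
        self._stored_total = self._compute()


inv = Invoice(qty=3, price=10)  # 1 evaluation
for _ in range(100):
    _ = inv.stored_total        # 100 reads, no extra evaluations
inv.set_qty(4)                  # 1 more evaluation
for _ in range(100):
    _ = inv.unstored_total      # 100 reads, 100 more evaluations

print(inv.evaluations)   # 102
print(inv.stored_total)  # 40
```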

- Simplify Layouts / Reduce Layout Overhead

- Reduce the number of objects, portals, summary fields, conditional formatting, tooltips, etc., especially on layouts used often.

- Use the Freeze Window script step before loops to avoid screen-redraw overhead.

- Optimize Relationship Graph

- Keep relationship structure simple; avoid deeply nested related tables unless absolutely needed.

- Use the anchor-buoy pattern to organize the relationship graph, or otherwise limit the number of table occurrences.

- Index Wisely

- Index fields that are frequently used for search, sort, or relationship matching.

- Turn off indexing for fields that aren’t used for those purposes to reduce overhead.
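This rough Python analogy shows the indexing trade-off. An index maps each value to the matching record IDs, so a find becomes a lookup instead of a scan of every record. In exchange, every write also has to update the index. This is a conceptual model, not FileMaker’s index format, and the `Table` class and field names are hypothetical.

```python
from collections import defaultdict


class Table:
    """Toy table with one indexed field (conceptual model only)."""

    def __init__(self, indexed_field: str) -> None:
        self.records: dict[int, dict[str, str]] = {}
        self.indexed_field = indexed_field
        self.index: defaultdict[str, set[int]] = defaultdict(set)
        self.index_updates = 0  # extra work paid on every write

    def add(self, record_id: int, record: dict[str, str]) -> None:
        self.records[record_id] = record
        self.index[record[self.indexed_field]].add(record_id)
        self.index_updates += 1

    def find_indexed(self, value: str) -> set[int]:
        # One dictionary lookup, regardless of how many records exist.
        return set(self.index.get(value, set()))

    def find_unindexed(self, field: str, value: str) -> tuple[set[int], int]:
        # Must examine every record; also returns how many were examined.
        matches = {rid for rid, rec in self.records.items() if rec[field] == value}
        return matches, len(self.records)


customers = Table(indexed_field="city")
for i, city in enumerate(["Oslo", "Lima", "Oslo", "Pune"]):
    customers.add(i, {"city": city, "name": f"Customer {i}"})

print(customers.find_indexed("Oslo"))                  # {0, 2}
print(customers.find_unindexed("name", "Customer 3"))  # ({3}, 4)
print(customers.index_updates)                         # 4
```

The unindexed find has to examine every record, and that count grows with the table. The indexed find stays a single lookup, paid for by one index update per write.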

- Batch Remote Calls; Reduce Network Traffic

- Combine data operations rather than doing many small calls in a loop.

- Use server-side logic or PSOS to reduce back-and-forth.

- Cache static data where possible.
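When the client is a Data API integration, batching can be as simple as requesting records in pages instead of one per call. The sketch below only builds URLs for the Data API’s get-records endpoint, using its `_offset` (1-based) and `_limit` query parameters. The host, database, and layout names are hypothetical, and authentication and the HTTP requests themselves are omitted. Check the endpoint version against your FileMaker Server’s Data API documentation.

```python
# Build Data API "get records" URLs that page through a table instead of
# requesting one record per call. Host, database, and layout names are
# hypothetical; authentication and the actual HTTP calls are omitted.
from urllib.parse import quote, urlencode


def page_urls(host: str, database: str, layout: str,
              total: int, page_size: int = 100) -> list[str]:
    base = (
        f"https://{host}/fmi/data/vLatest/databases/{quote(database)}"
        f"/layouts/{quote(layout)}/records"
    )
    urls = []
    # _offset is 1-based, so pages start at 1, 1 + page_size, ...
    for offset in range(1, total + 1, page_size):
        urls.append(f"{base}?{urlencode({'_offset': offset, '_limit': page_size})}")
    return urls


urls = page_urls("fms.example.com", "Sales", "Invoices API", total=1050)
print(len(urls))  # 11
print(urls[1])
```

Under these assumptions, 1,050 records take 11 requests instead of 1,050.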

- Archive or Split Data

- Move old/unneeded data to archive files, so “working” files are leaner.

- Split large files into smaller logical parts if possible.
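Here is a minimal Python sketch of the archiving idea: split records into a lean working set and an archive set by a cutoff date. The record shape, the `modified` key, and the two-year cutoff are hypothetical. In the approach described above, the archive set would then be moved to a separate archive file.

```python
from datetime import date, timedelta


def split_for_archive(
    records: list[dict], today: date, keep_days: int = 730
) -> tuple[list[dict], list[dict]]:
    """Return (working, archive); records older than the cutoff go to the archive."""
    cutoff = today - timedelta(days=keep_days)
    working = [r for r in records if r["modified"] >= cutoff]
    archive = [r for r in records if r["modified"] < cutoff]
    return working, archive


records = [
    {"id": 1, "modified": date(2019, 3, 10)},
    {"id": 2, "modified": date(2023, 11, 20)},
    {"id": 3, "modified": date(2024, 5, 30)},
]
working, archive = split_for_archive(records, today=date(2024, 6, 1))
print([r["id"] for r in working])  # [2, 3]
print([r["id"] for r in archive])  # [1]
```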

- Use Efficient Script Design

- Avoid unnecessary commits inside loops; commit only when necessary.

- Avoid layout changes inside loops unless necessary.

- Limit script trigger usage, and disable triggers (or make them exit early) when they aren’t needed.

- Use calculation functions such as While() (introduced in FileMaker 18) to reduce or replace looping scripts where possible.
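This toy Python model illustrates the advice on commits and triggers above. Committing after every field edit multiplies both the commits sent to the host and the runs of a commit trigger. Setting all fields and committing once, with the trigger suppressed, avoids both. The `Record` class is hypothetical and is not FileMaker’s engine. OnRecordCommit is a real FileMaker trigger, but the suppression flag stands in for a common convention (a trigger script that exits early while a global variable is set), not a built-in setting.

```python
from collections.abc import Iterator
from contextlib import contextmanager


class Record:
    """Toy record (not FileMaker's engine): edits stay local until committed."""

    def __init__(self) -> None:
        self.fields: dict[str, str] = {}
        self.commits = 0             # each commit sends the edits to the host
        self.trigger_runs = 0        # runs of a hypothetical OnRecordCommit script
        self.triggers_enabled = True

    def set_field(self, name: str, value: str) -> None:
        self.fields[name] = value    # local edit, no commit yet

    def commit(self) -> None:
        self.commits += 1
        if self.triggers_enabled:
            self.trigger_runs += 1


@contextmanager
def triggers_suppressed(record: Record) -> Iterator[None]:
    # Mirrors a trigger script that exits early while a "disable triggers"
    # flag is set; triggers are always re-enabled afterwards.
    record.triggers_enabled = False
    try:
        yield
    finally:
        record.triggers_enabled = True


def fill_commit_each(record: Record, values: dict[str, str]) -> None:
    for name, value in values.items():
        record.set_field(name, value)
        record.commit()              # commit after every field: wasteful


def fill_commit_once(record: Record, values: dict[str, str]) -> None:
    with triggers_suppressed(record):
        for name, value in values.items():
            record.set_field(name, value)
        record.commit()              # one commit for all fields


values = {f"field_{i}": "x" for i in range(10)}
a, b = Record(), Record()
fill_commit_each(a, values)
fill_commit_once(b, values)
print(a.commits, a.trigger_runs)  # 10 10
print(b.commits, b.trigger_runs)  # 1 0
```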

- Proper Server & Hardware Configuration

- Use dedicated server hardware with fast SSDs, ample RAM, and a fast network connection.

- Don’t overload the FileMaker Server machine with backup agents, indexing services, antivirus scanning, or other heavy tasks.

- Monitor & Measure

- Use the FileMaker Server Admin Console to enable and review the Top Call Statistics log and other remote-call statistics.

- Profile performance (time scripts, measure find/search operations, etc.).

- Test under realistic load (network latency, concurrent users).
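For profiling, a repeatable measurement is more reliable than a single stopwatch reading. This Python sketch runs an operation several times and reports the median elapsed time. `median_ms` is a hypothetical helper, and the sorting workload is only a stand-in. Inside FileMaker, the same idea applies by capturing Get ( CurrentTimeUTCMilliseconds ) before and after the steps being measured.

```python
import statistics
import time
from collections.abc import Callable


def median_ms(operation: Callable[[], object], runs: int = 5) -> float:
    """Run `operation` `runs` times and return the median duration in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        samples.append((time.perf_counter() - start) * 1000)
    return statistics.median(samples)


elapsed = median_ms(lambda: sorted(range(100_000), reverse=True))
print(f"median: {elapsed:.2f} ms")
```

The median is used rather than the mean so that one unusually slow run, such as a cold cache, does not dominate the result.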

Practical Examples / Case Studies

- In one documented case, a script processing ~58,000 records was performing a find on an unstored calculation flag. After the developers removed the unstored calculation and optimized the logic, execution time dropped from 5 hours to 6 seconds.

- In another reported case, WebDirect layouts with many summary fields were slower in FileMaker 19.5 than in earlier versions because rendering related summaries took longer.
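The figures from the ~58,000-record case also indicate the per-record cost. The calculation below treats ~58,000 as exactly 58,000:

```latex
\begin{aligned}
5\,\text{h} &= 5 \times 3600\,\text{s} = 18{,}000\,\text{s} \\
\text{speedup} &= \frac{18{,}000\,\text{s}}{6\,\text{s}} = 3{,}000\times \\
\text{per record, before} &\approx \frac{18{,}000\,\text{s}}{58{,}000} \approx 0.31\,\text{s} \\
\text{per record, after} &\approx \frac{6\,\text{s}}{58{,}000} \approx 0.10\,\text{ms}
\end{aligned}
```

In other words, the original script spent roughly a third of a second on each record.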

Summary

- Many of these problems come from design decisions that seem harmless at small scale but cause disproportionate slowdowns as data or usage grows.

- FileMaker is a powerful tool, but it has performance traps, such as unstored calculations, overly complex layouts, too many remote calls, and hardware/network limitations.

- You can avoid most performance flaws by adhering to best practices, which include streamlining relationships, using server-side scripts, indexing carefully, monitoring, and creating lean layouts.